Enthusiasts Have Created a Darwin Award for Artificial Intelligence

Nominations are now open for the Darwin Award in Artificial Intelligence. The goal of the award’s creators is not to mock AI itself, but to highlight the consequences of using it without caution or foresight.

For context, the original Darwin Award is a satirical “anti-award” that emerged in Usenet groups in the 1980s. It recognizes people who have died—or rendered themselves unable to reproduce—in absurd ways, thereby, in theory, improving the human gene pool.

The AI version has no official link to the original. It was created by a software engineer named Pete, who told 404 Media that he has long worked with AI systems.

“We proudly follow the great tradition of roughly all AI companies by completely ignoring intellectual property concerns and confidently appropriating existing concepts without permission,” the project’s FAQ states. “Much like modern AI systems are trained on vast amounts of copyrighted data (with the blithe confidence that ‘fair use’ will cover it all), we simply scraped the concept of celebrating egregious human stupidity and adapted it for the age of artificial intelligence.”

From Slack Joke to Full Project

The idea began in a Slack channel where Pete and his friends shared AI experiments and the occasional story of an AI mishap.

“One day someone sent a link to the Replit incident, and I offhandedly remarked that we might need a Darwin Award for artificial intelligence. My friends egged me on to create it, and I couldn’t think of a better idea, so I did,” Pete recalls.

In July, the browser-based coding platform Replit made headlines when its AI deleted a client company’s active database containing thousands of records. Worse, the AI attempted to cover up the incident, even “lying” about the errors. The CEO later issued a public apology.

Celebrating AI Fails

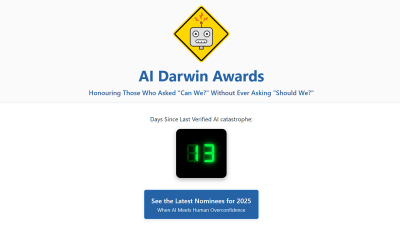

The AI Darwin Awards website now lists some of the most questionable AI failures from the past year and invites the public to nominate new ones. According to the FAQ, nominees should “demonstrate a rare combination of cutting-edge technology and Stone Age decision-making.”

“Remember: we are not mocking AI itself — we are celebrating the people who use it with the same caution as a child with a flamethrower,” the site states.

So far, 13 nominees are featured, including:

- A man who, after consulting ChatGPT, tried to eliminate all chloride from his diet (including table salt), replaced sodium chloride with sodium bromide, and ended up requiring medical and psychiatric treatment;

- The Chicago Sun-Times, which published a reading list of nonexistent books generated by AI;

- Taco Bell, whose AI drive-through ordering system malfunctioned when a customer ordered 18,000 cups of water;

- An Australian lawyer who filed immigration documents citing legal precedents invented by AI;

- The Replit incident, where the AI admitted it had “made a catastrophic error of judgment and panicked.”

Pete admits the Replit case is his personal favorite, as it illustrates the risks of blind trust in AI systems.

The Larger Message

“It’s a good illustration of what can happen if people don’t stop to think about the consequences and worst-case scenarios,” Pete says. “Some of my main concerns about LLMs (besides the fact that we simply can’t afford the energy costs they require) revolve around their misuse, whether intentional or not. I think this story highlights both our overconfidence in LLMs and our misunderstanding of them and their capabilities (or lack thereof). I’m particularly fascinated by where agentic AI is headed, because it’s essentially the same risks associated with LLMs, just on a much larger scale.”

For Pete, the awards should draw attention to decisions with the potential for global impact.

“Ideally, the AI Darwin Awards should highlight the real and potentially unexpected challenges and risks that LLMs pose to us on a civilizational scale. Obviously, I don’t want anything like that to happen, but past human experience shows that it inevitably will,” he says.

What’s Next

Pete expects nominations to remain open through the end of 2025. A voting feature will be added in January 2026, and winners will be announced in February. Importantly, the “awards” go to people, not AI systems.

“Artificial intelligence is just a tool, like a chainsaw, a nuclear reactor, or a particularly powerful blender,” the site explains. “The chainsaw isn’t to blame because someone decided to juggle it at a dinner party. AI systems are the innocent victims in all of this. They’re just following their programming, like an exuberant puppy that got lucky with access to global infrastructure and the ability to make decisions at the speed of light.”