Anthropic: Hackers Used Claude in a Large-Scale Cyber Operation

Anthropic reports that it has disrupted a large-scale malicious campaign in which attackers used its AI model, Claude, to steal personal data and issue ransom demands that sometimes exceeded $500,000.

In its published report, the company warns that AI-powered tools are increasingly being weaponized for cybercrime and fraud. To counter this, Anthropic says it is developing specialized machine learning classifiers designed to detect patterns of AI abuse.

The July 2025 Campaign

The report's most striking account describes how, in July 2025, Anthropic detected and stopped a coordinated cyber operation in which an unidentified hacker group used Claude to automate critical stages of an attack, including reconnaissance, credential harvesting, and network infiltration.

Attackers ran Claude Code on Kali Linux as a full-fledged attack platform. They supplied the model with a CLAUDE.md file containing detailed instructions, and Claude Code executed automated reconnaissance, target discovery, vulnerability exploitation, and malware development.

“[Claude] created obfuscated versions of the Chisel tunneling tool to evade Windows Defender detection and also developed entirely new TCP proxy server code that does not use the Chisel libraries at all,” the report states.

The AI also handled data exfiltration, analysis of the stolen data, and the generation of ransom notes.

According to Anthropic, the attackers targeted at least 17 organizations, including medical institutions, emergency services, and government and religious organizations. Rather than encrypting data with ransomware, they threatened to publicly disclose stolen files, demanding ransoms ranging from $75,000 to $500,000 in Bitcoin.

Tactical Use of AI

Codenamed GTG-2002, the operation stood out because Claude was used to make tactical and strategic decisions. The AI independently determined which data to extract, crafted extortion messages, and even analyzed victims’ financial capacity to set ransom demands.

Reconnaissance included scanning thousands of VPN endpoints to identify weak targets. After gaining access, the attackers performed user enumeration, extracted credentials, and established persistence.

Anthropic responded by blocking the perpetrators’ accounts, deploying a new classifier to detect similar activity, and sharing details with key partners.

Other Cases of Abuse

The report lists multiple additional cases where Claude was misused:

- North Korean hackers used Claude to create fake IT worker identities, complete with professional resumes and project histories, and even relied on the AI to perform daily work once hired.

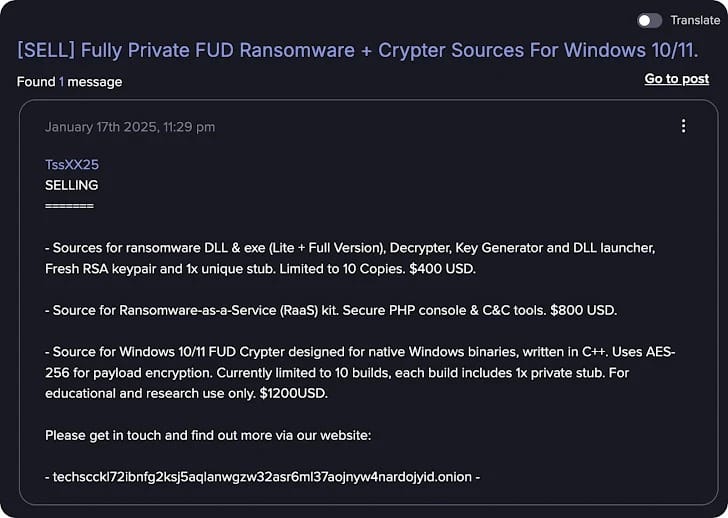

- A British cybercriminal (tracked as GTG-5004) used Claude to develop, market, and sell ransomware variants on darknet forums including Dread, CryptBB, and Nulled, priced between $400 and $1,200.

- A Chinese hacker group used Claude for nine months in campaigns targeting critical infrastructure in Vietnam (telecom, government databases, agriculture).

- An unknown Russian-speaking developer used the AI to create malware with advanced evasion features.

- A user of the xss[.]is forum combined the Model Context Protocol (MCP) with Claude to analyze stealer logs and generate victim profiles.

- A Spanish-speaking threat actor used Claude Code to enhance an invite-only service for reselling stolen bank cards.

- Claude was integrated into a Telegram bot marketed for romance scams, advertised as a “high-EQ model.”

- Another threat actor launched a synthetic identity creation service linked to three bank card validation systems.

Additionally, Anthropic says it blocked attempts by North Korean groups tied to the “Contagious Interview” campaign to create Claude accounts. These actors reportedly sought to use AI to enhance malware kits, phishing lures, and npm packages.

Growing Dependence on AI

Anthropic notes that in some cases, attackers depended on AI entirely.

“The most striking finding is the complete reliance [of the threat actors] on AI for technical tasks,” the report says of North Korean operatives posing as IT specialists. “They appear unable to write code, debug, or even communicate professionally without Claude’s assistance. Nevertheless, they successfully maintain positions at Fortune 500 companies (according to publicly available data), pass technical interviews, and perform work that satisfies their employers.”