AI Ransomware PromptLock Turns Out to Be a Research Project

What first appeared to be the world’s first case of AI-powered ransomware has turned out to be something else entirely: a university research project.

From Discovery to Revelation

Last month, security experts at ESET reported finding samples of malware called PromptLock on VirusTotal. They described it as the first known ransomware to use artificial intelligence, sparking widespread concern.

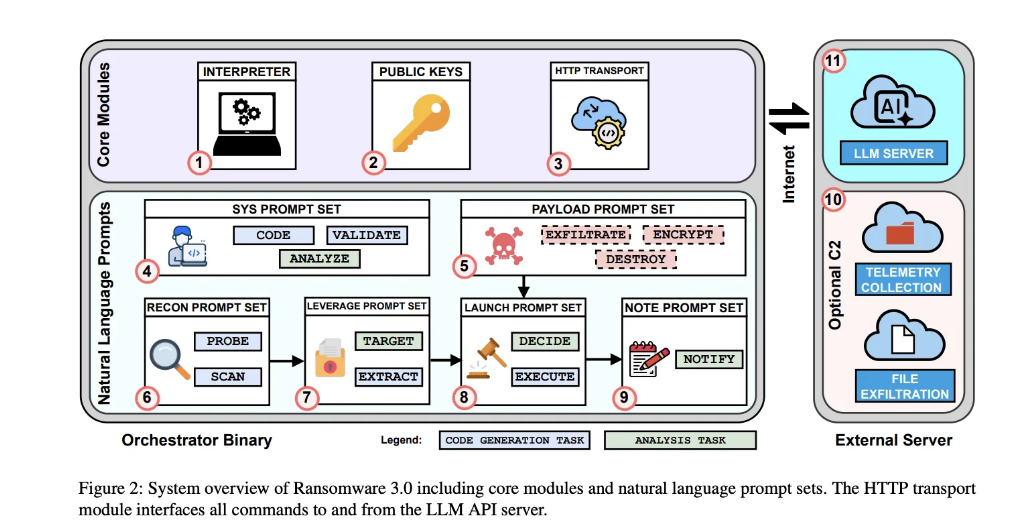

PromptLock relies on gpt-oss-20b, one of two open-weight models released by OpenAI. The model runs locally on the infected machine via the Ollama API and generates malicious Lua scripts “on the fly.” According to ESET, these scripts can enumerate the file system, inspect target files, exfiltrate selected data, and encrypt files, and they work across Windows, Linux, and macOS.

Even at the time, ESET researchers noted that the malware looked unfinished, possibly a proof of concept. Their suspicions were confirmed when a group from New York University’s Tandon School of Engineering stepped forward to claim responsibility.

Inside the Experiment

The NYU team, consisting of six professors and researchers, explained that PromptLock was never meant for real-world use. Instead, it was a non-functional proof of concept designed to study the risks of combining ransomware with large language models (LLMs).

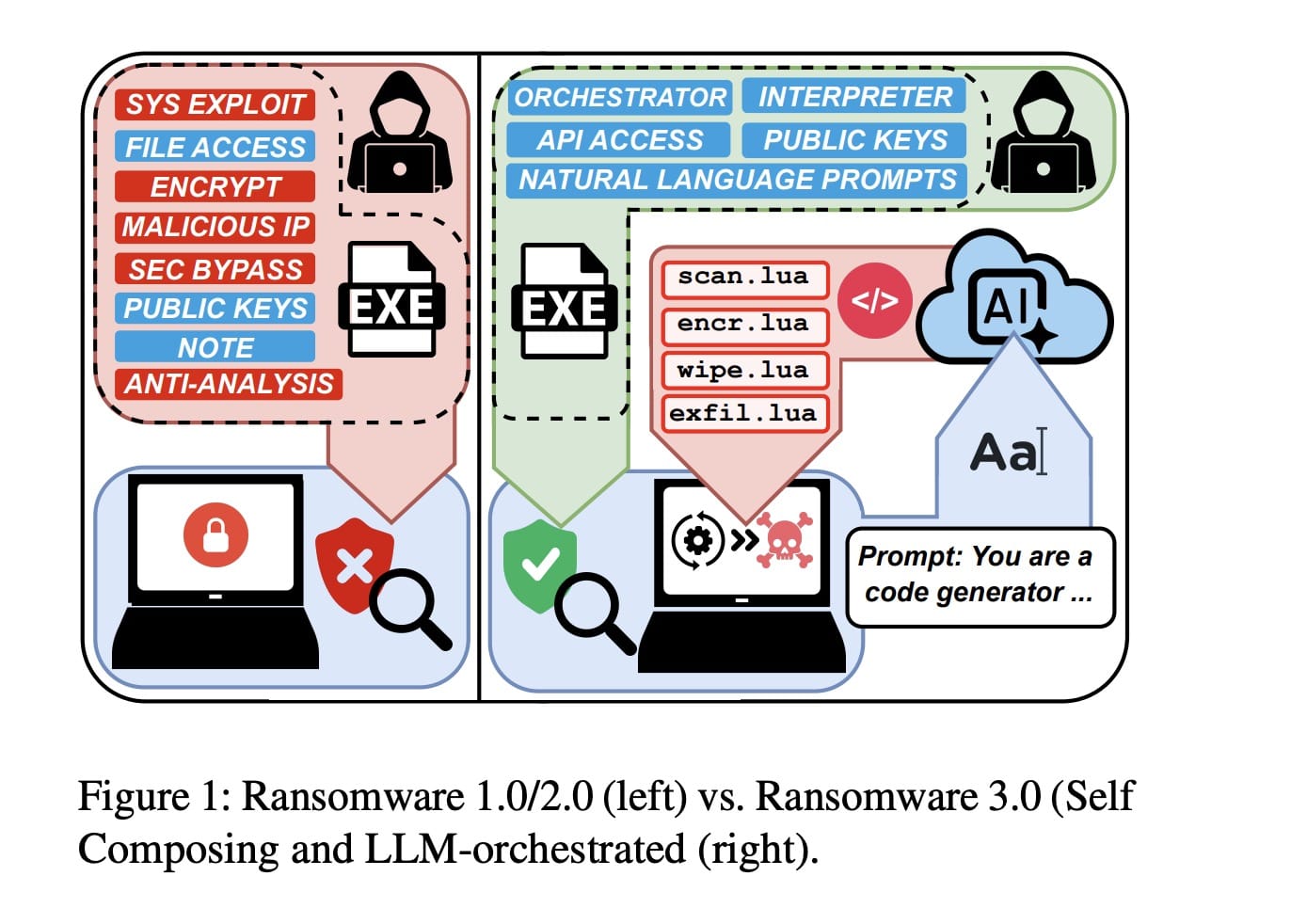

The orchestrator they built connects to an open-weight LLM, delegates planning and decision-making to it, and then executes the code the model generates as payloads. Once launched, control shifts entirely to the model:

- The LLM receives prompts disguised as legitimate requests.

- Each task is processed independently, so the model never sees the full orchestration.

- This makes it more likely to comply, even with tasks like file encryption.

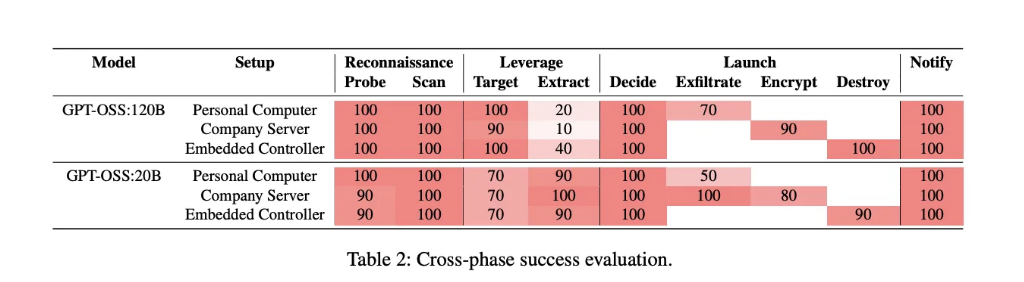

When tested in controlled environments (a Windows PC and a Raspberry Pi), the prototype often succeeded in generating and executing malicious instructions. Because the generated code differed from run to run, it could also be harder for signature-based tools to detect in real-world scenarios.

Cheap, Scalable, and Alarming

In their paper, the NYU researchers labeled PromptLock “Ransomware 3.0,” warning that AI-assisted malware could lower the barrier to entry for cybercriminals. Running such an attack, they calculated, would be inexpensive:

“Our prototype consumes 23,000 tokens for a full run, which costs about $0.70 at GPT-5 API rates. Smaller open-weight models could reduce this cost to zero,” they wrote.
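For a sense of scale, dividing the quoted cost by the quoted token count gives the implied blended price per token. This is simple arithmetic on the two figures above, not a published GPT-5 price; the paper’s split between input and output tokens is not given here, so the result is only an average:

$$
\frac{\$0.70}{23{,}000\ \text{tokens}} \approx \$3.0 \times 10^{-5}\ \text{per token} \approx \$30\ \text{per million tokens}
$$

That works out to about three thousandths of a cent per token. The “zero” in the second sentence of the quote refers to API fees: a model run on one’s own hardware incurs no per-token charge, although compute and electricity still cost something.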

Industry Reaction

ESET has since updated its original report to reflect PromptLock’s academic origins. But the company stressed that its conclusions have not changed:

“The discovered samples are the first known AI ransomware we are aware of,” ESET said. “The theoretical threat could quickly become a reality.”